The trouble with analogies

It's not that simple

One way to think about LLMs and the current state of AI is that these models are, to varying degrees, reasoning engines, and that intelligence will become some kind of commodity. “Intelligence on tap”. And to some degree, that’s not a terrible analogy.

But let’s extend it a bit. Electricity is a commodity, right? How do you consume it? Not really directly in its raw form; that’s usually pretty dangerous, and you have to be an expert to do it. So we usually consume it via some packaged mediation - we charge up our phone, run our toaster or TV, or drive our car. Yes, the electrons are a fungible commodity (though some sources cost more than others - which is not a bad analogy to models and capabilities right now), but consuming them involves some thought, expertise and, usually, engineering. So, perhaps “intelligence becomes a commodity” will be something like that?

Or is it more like a scarce commodity, like gold? Yes, fungible, but of limited supply, and whoever hoards it has some kind of advantage? (I doubt this one). Or like wheat? Useful, mostly interchangeable, but perishable? I don’t know … I suspect the analogy stops here.

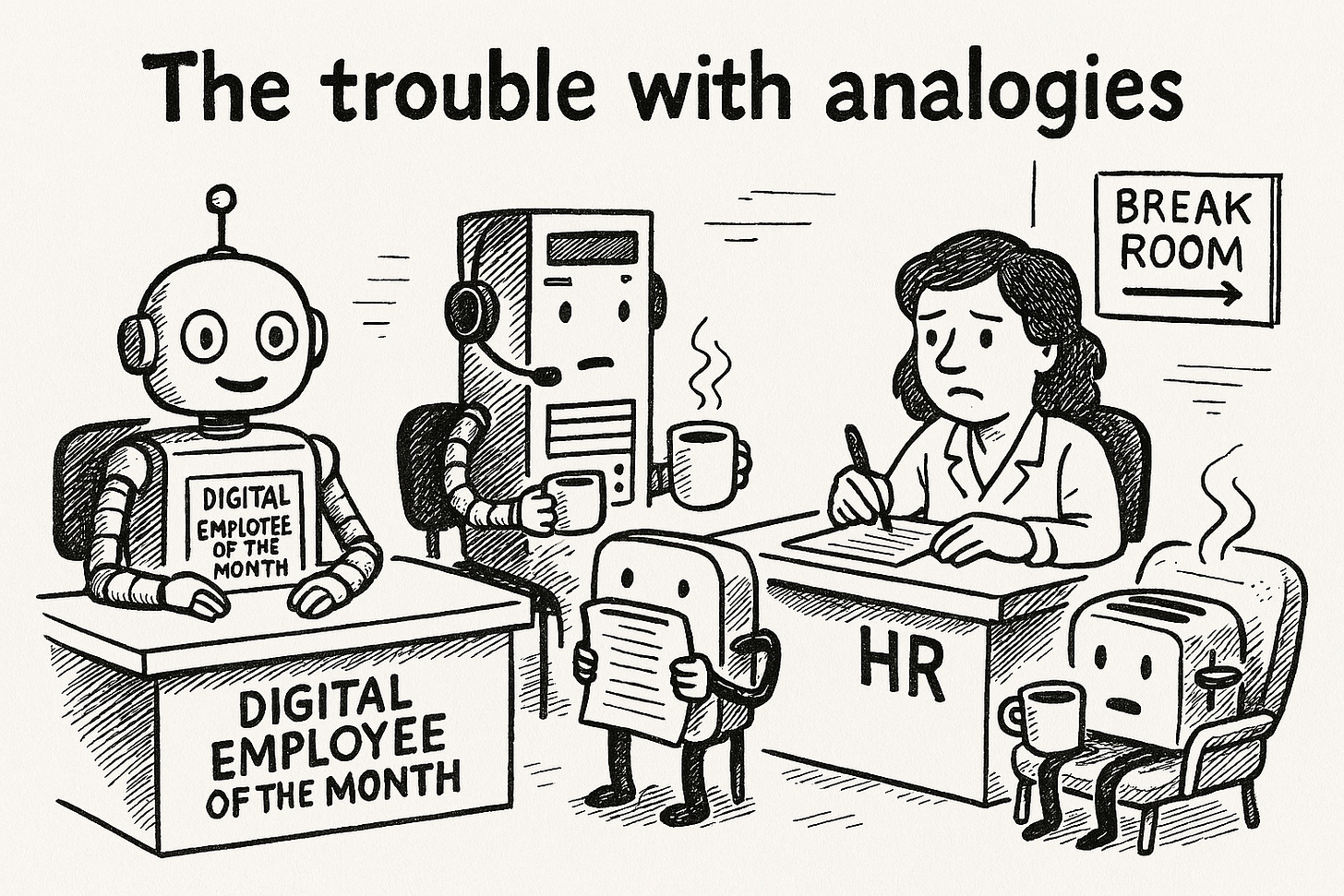

Here’s another one: AI is like a digital worker. Is it? In some ways it is - better systems can do tasks independently now (for example, I took the recent 157-page PDF of Bill Gates’s early Altair assembly and had Manus transcribe it into actual assembly - which took the model about an hour to do!). But I’ve started to see really silly extensions of this analogy - almost literally talking about them as humans, needing management, hiring systems, HR, etc. Thinking of them as workers is helpful - until we start building digital HR departments, and then it gets really counterproductive. (Do they need digital bathrooms? Mini kitchens?)

Also, this gets in the way of doing engineering, like using many copies of the same agent (program) to do a task in parallel, or restricting information in a way that would be repellent if we did it to humans, but which is fine for a program (cf. Severance). What would the modern world be like if we had to “hire” electrons, or cared about their “feelings”, or worried about their “working hours”? It’s silly! They’re electrons! We make them, we use them, they do stuff! Sure, you can have a little Disney cartoon of them being “our little electric workers”, but no one would take that literally and then impose HR mechanisms on them.

It’s understandable to work by analogy right now. I do it too! There is a lot going on - in fact, this essay was hard to write at first, not because I didn’t know what to say but because I had too much to say. Navigating this complex and rapidly evolving space by thinking about analogies to something you understand well is a good strategy. But, like any good strategy, it becomes a liability if you aren’t aware of it or if you overuse it.

We are a bit stuck! We do need to think by analogy here, but analogies can lead us astray. The best you can do is be aware of this, and constantly challenge your analogies to see if they still make sense when taken to extremes.

And yet, making and using analogies is one of the key attributes of cognition and intelligence. Is there any case where complex ideas are not explained by analogy?

Remember that AI is an expansive term that spans everything from older, symbolic AI (e.g. expert systems) to the as-yet-unrealized AGI and superintelligent AI. Will it be ubiquitous? Moore's Law and the ease of creating the substrate suggest that it will be. Lack of scarcity will drive its value towards zero. But which AIs? If scaling continues for LLM-based AI, scarcity can be created for that kind of AI, but do we need it? Perhaps smaller, device-based AIs are all that are needed. (I do not need a human expert navigator to give me directions.)

Can AI replace most "white collar" jobs? Maybe. But how will mistakes be corrected, especially those impacting humans? DOGE is leading the way in showing the chaos that results when you take access to people out of the loop.