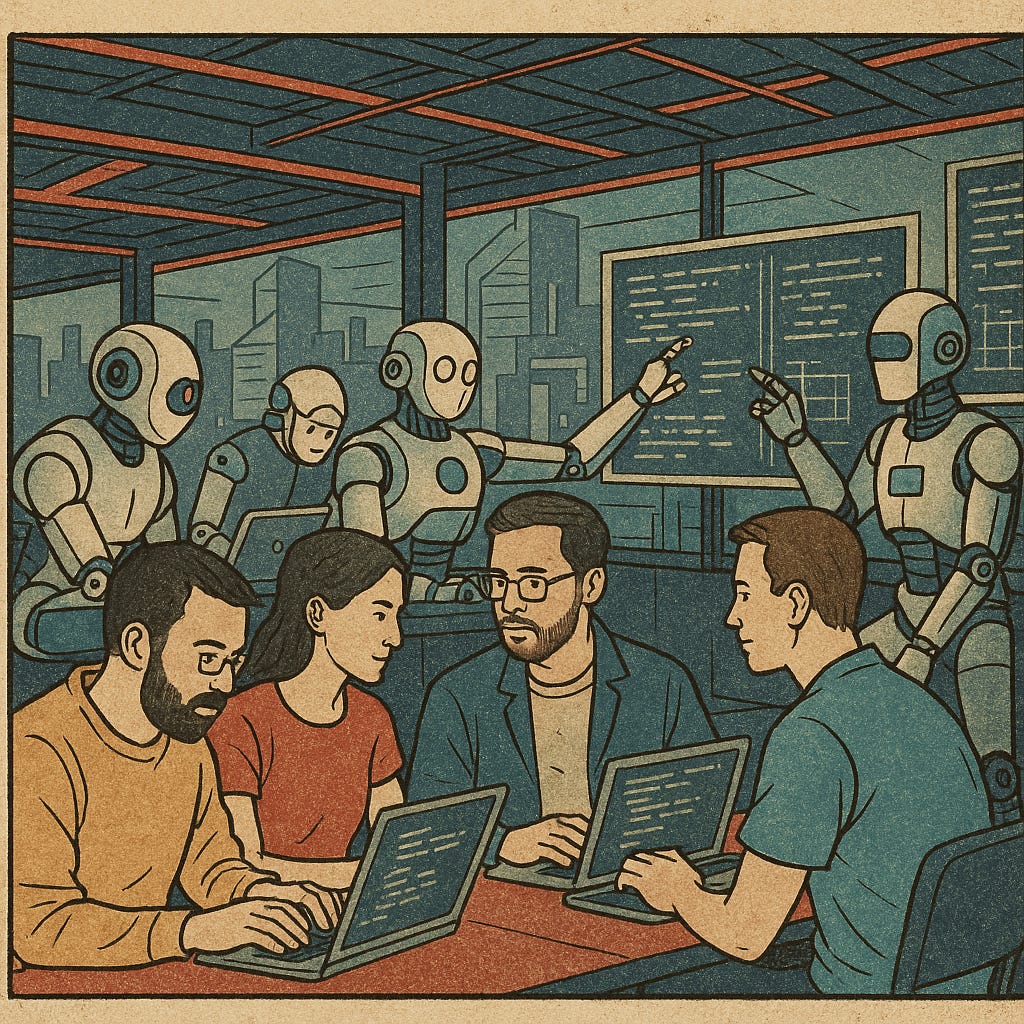

Working together is a fundamental part of the professional world, at least for most kinds of knowledge work. Anyone who has been part of any kind of company knows that learning to work with other people is a critical skill. Lacking this ability usually puts a real limit on how successful you can be. Students know this too - even though they’re in competition at some level (for grades and placement), students spend a lot of time formally and informally working together, which is good. AI tools are going to change the nature of this collaboration.

Why do we collaborate? There are fundamentally two reasons, which we can label quantity and quality. Quantity is simple - it means “there is too much work for one person, let’s spread it out”. Quality means “you have some information or skills that I don’t have (or vice versa), so working together will make the outcome better or faster”.

Right now, we are just starting to get LLM-based tools that can act independently and reliably (people call these agents, but I prefer to think of them as tools - I don’t find it helpful to anthropomorphize). These tools are becoming steadily more reliable, so it is increasingly possible to hand work off to them. Soon, they will be able to spin up copies of themselves to work on a task in parallel. This chips away at the quantity problem - humans don’t “scale up”, but computers do. Suddenly, we don’t need a big team to do some kinds of big tasks.

This has really interesting side effects. One of the core ideas in the software world is the “mythical man-month”, from Fred Brooks’s 1975 book of that title. It’s the observation that, for software teams (and other knowledge-work teams), adding people doesn’t always speed things up, because communication overhead grows roughly with the square of the team size (if you aren’t careful, everyone has to talk to everyone else). But with AI agents that can coordinate, or be coordinated, on a large task, this problem starts to go away: a smaller number of human engineers can move much faster, because they don’t have to communicate as much to get work done.
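To make the “square of the team size” intuition concrete: if every one of n people has to talk to every other, the number of pairwise communication channels is n(n-1)/2. The sketch below counts those channels under that all-to-all assumption. The function names are hypothetical, and the `star_channels` comparison (one coordinator talking to everyone else, with no side conversations) is an illustrative assumption about how a coordinated setup might look, not a claim about how any real agent system works.

```python
def pairwise_channels(team_size: int) -> int:
    """Channels needed if everyone talks to everyone (all-to-all).

    Each of the n people pairs with the other n - 1, and each pair is
    counted once, giving n * (n - 1) / 2. The product n * (n - 1) is
    always even, so integer division is exact.
    """
    if team_size < 0:
        raise ValueError("team_size must be non-negative")
    return team_size * (team_size - 1) // 2


def star_channels(team_size: int) -> int:
    """Channels if one coordinator talks to everyone else (assumed hub-and-spoke).

    A hypothetical contrast case: n - 1 links, growing linearly with n.
    """
    if team_size < 0:
        raise ValueError("team_size must be non-negative")
    return max(team_size - 1, 0)


if __name__ == "__main__":
    for n in (2, 5, 10, 20):
        print(f"{n:>2} people: {pairwise_channels(n):>3} all-to-all, {star_channels(n):>2} hub-and-spoke")
```

Doubling the team from 10 to 20 takes the all-to-all count from 45 to 190 channels, roughly a fourfold increase, while the hub-and-spoke count only goes from 9 to 19. That gap is the intuition behind the argument that coordination, rather than raw headcount, is the bottleneck.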

Quality, our other reason for collaborating, is more complex. LLMs absolutely bring skills to the table, so in some cases you can get higher quality by “collaborating” with an LLM instead of a person. Not always, though: the information and context a collaborator brings can be as important as their skills, or even more important. As LLM systems hold more of our information and can share more of it under our control, this will change too.

Is this change to collaboration good or bad? Yes! It will probably be some of both, as all things are. The good part is that more people are empowered to do more work for themselves - I have always believed that removing barriers to human imagination and energy is fundamentally good (as long as we have good humans). Empowering someone to be ambitious who might not otherwise have been seems pretty great to me.

On the other hand…we need to get along with each other. Working together is an important skill to have. It would be a shame to lose that. I suspect that what will really happen though (and I am seeing it in my own teams) is that we will actually find ways to work together more with the help of AI. People like each other, for the most part. We want to work together and we find ways to do it. My engineering team spent a little bit of time happily coding alone, and then very quickly started to look for ways to work together, share context, and continue to amplify each other.

I’m optimistic. But whether you are or not, it’s clear to see - collaboration is going to change, a lot.

I'll try to keep quiet from now on, but ...

My main concern is where the productivity gains accrue, and how that destroys society by benefiting the rich. GenAI is essentially a cheap employee. If that benefit accrues to each employee who learns to use GenAI, then those employees benefit.

If, however, GenAI more naturally benefits a company that then RIFs (reduction in force) 10% of its employees to replace them with GenAI, the only humans (*not* entities, actual humans) who benefit are those rich enough to own stock. For how many quarters has MSFT RIF'ed? For how many more will it continue?

If a company increases its revenue by paying for AI while not paying for humans, should mankind receive no money?

RE: smaller number of human engineers can be much faster, because they don’t have to communicate as much to get work to happen

Why would we expect coordinating AIs to need less communication? Or are you saying that for some problems the mythical man month is NOT mythical, and we perhaps didn't fully explore that space because of the overhead of hiring and managing humans?

BTW, there is a typo in the second paragraph ("Quantity means" instead of "Quality means") that threw me off all the way to the end. I wonder if a simple prompt of "check this text for typos and miscommunications" would have caught it.